Uncovering disparities in automated speech recognition systems

Automated speech recognition (ASR) systems, which use sophisticated machine-learning algorithms to convert spoken language to text, have become increasingly widespread, powering popular virtual assistants, facilitating automated closed captioning, and enabling digital dictation platforms for health care. Over the last several years, the quality of these systems has dramatically improved, due both to advances in deep learning and to the collection of large-scale datasets used to train the systems. There is concern, however, that these tools do not work equally well for all subgroups of the population.

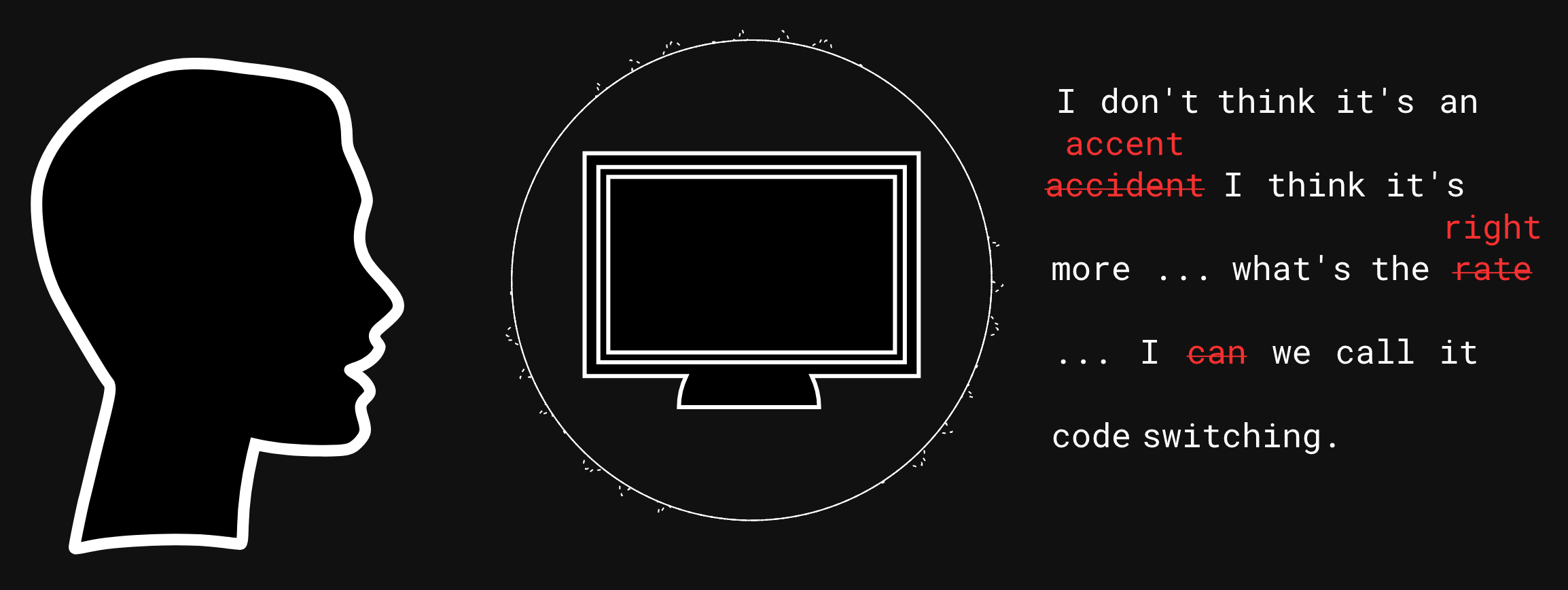

We studied the ability of five state-of-the-art commercial ASR systems to transcribe 20 hours of structured interviews with white and Black speakers. We found that all five of these leading speech recognition tools misunderstood Black speakers twice as often as white speakers. We traced these disparities to the underlying acoustic models used by the ASR systems: the race gap persisted on a subset of identical phrases spoken by both white and Black individuals, where differences in word choice or grammar cannot explain the errors. These findings highlight the need to train ASR systems on more diverse data, including audio samples from speakers of African American Vernacular English. More generally, our work illustrates the need to audit emerging machine-learning systems to ensure they are broadly inclusive.
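To make the idea of a transcription error rate concrete, here is a minimal sketch of how such a comparison could be computed. The summary above does not name its metric; this sketch assumes word error rate (WER), the standard measure for ASR output, defined as the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. The function names `word_error_rate` and `mean_wer_by_group`, the text normalization (lowercasing and whitespace splitting), and the choice to average per-snippet WER within each group are all illustrative assumptions, not the study's actual pipeline.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: simple normalization (lowercase, whitespace split).
    Real evaluations usually normalize punctuation, numbers, etc. as well.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein, one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def mean_wer_by_group(samples):
    """Average per-snippet WER for each speaker group.

    `samples` is an iterable of (group, reference, hypothesis) tuples;
    this layout is hypothetical and chosen only for illustration.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for group, reference, hypothesis in samples:
        totals[group] += word_error_rate(reference, hypothesis)
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}
```

Given human reference transcripts and one system's output for each audio snippet, calling `mean_wer_by_group` returns one average per group, and the ratio of the two averages expresses how much more often the system errs for one group than the other.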

For more information, visit the Fair Speech Project website.